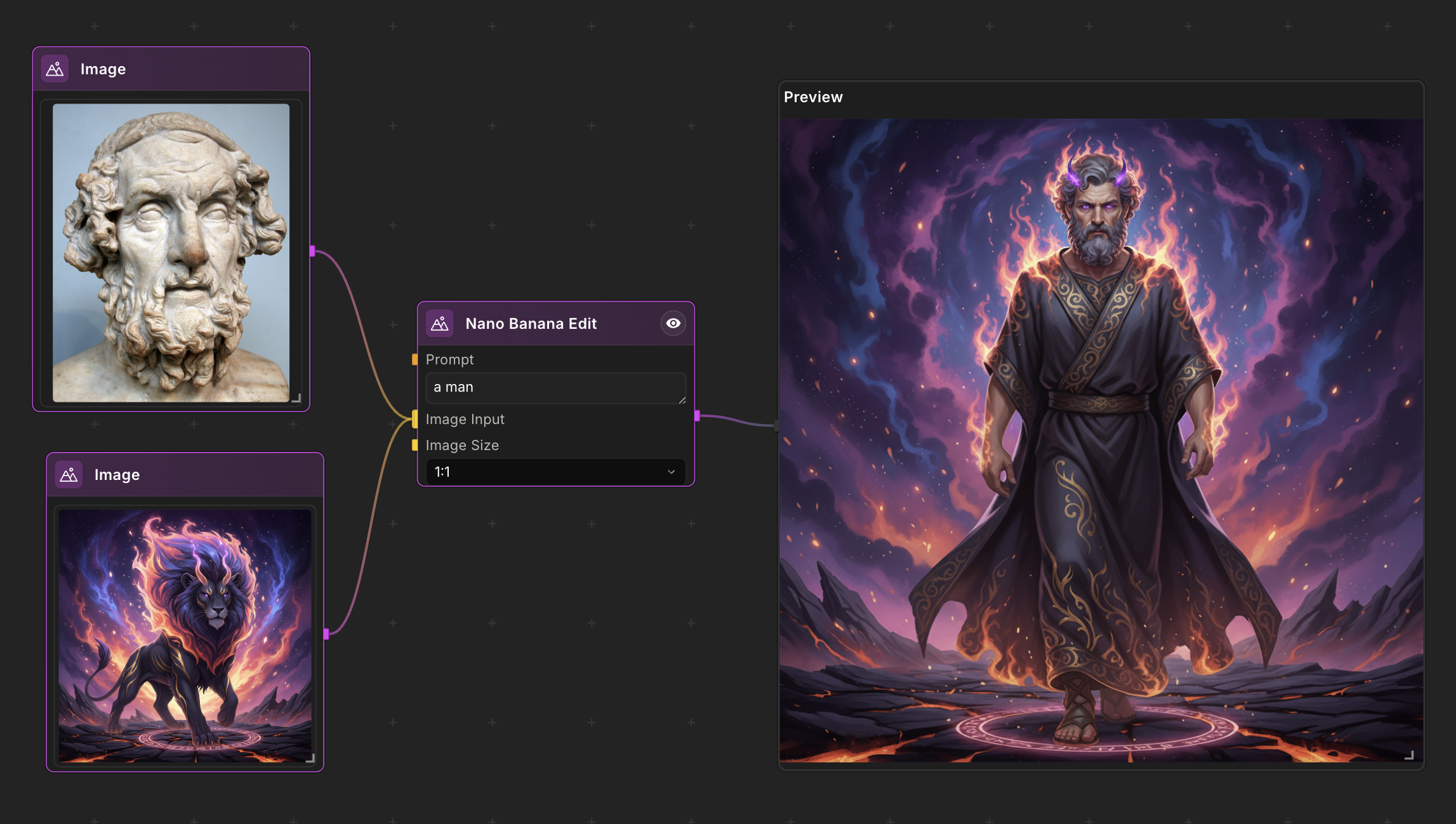

NodeTool: The Unified Visual AI Workflow Builder

NodeTool combines the node-based flexibility of ComfyUI with the automation power of n8n. Build advanced LLM agents, RAG pipelines, and multimodal data flows on your local machine.

The Visual Orchestrator for AI Workflows

- Visual Workflow Automation: A powerful alternative to n8n for AI-specific tasks. Orchestrate LLMs, data tools, and APIs in a unified drag-and-drop canvas.

- Generative AI & Media Pipelines: Similar to ComfyUI, but for more than just images. Connect nodes for video generation, text analysis, and multimodal processing without code.

- Local-First RAG & Vector DBs: Like Weaviate or Pinecone, but local-first. Index your documents, build semantic search pipelines, and run RAG workflows entirely on your machine.

- Autonomous AI Agents: Build agents that can search the web, browse sites, and use tools. Create multi-agent systems that solve complex tasks autonomously.

Open Source AI Utility • Privacy-First Architecture • Supports macOS, Windows, Linux